If you’ve ever asked ChatGPT to debug your system or explain a weird latency spike, you’ve probably gotten a confident, beautifully worded — and totally useless — answer.

Something like:

“It could be a network issue or database overload. Try optimizing your queries.”

That’s the moment every engineer realizes: general AI models don’t actually understand your system.

They can talk about it — but they can’t see it.

This is where Tetrix comes in.

ChatGPT and Claude are great at language. They’re trained on massive datasets and can write essays, summarize code, and even help you brainstorm architecture designs.

But here’s the catch — they operate in isolation.

They don’t have live access to:

Your codebase

Your logs, traces, or metrics

Your service dependencies

Your infrastructure or deployments

That means they can’t reason about your actual system — only about what’s statistically likely in someone else’s.

In technical terms, they lack system context.

And that’s why they miss what really matters: the why behind system behavior.

What “System Context” Really Means

When your app slows down, the root cause is rarely just “the database.”

It could be a deployment that changed API behavior, a misconfigured load balancer, or a spike in a downstream service.

System context is the map that connects all those moving parts — code, infra, and operations — into one coherent story.

Without that context, even the smartest LLM is like a doctor diagnosing a patient without seeing the test results.

That’s the blind spot of ChatGPT and Claude.

Meet Tetrix: The AI That Understands Your System

Tetrix is built to close that gap.

It connects your code, infrastructure, and operations, enabling AI to reason across your entire system — not just within the text of a chat.

Here’s how it works under the hood:

1. Observability Layer

Tetrix integrates directly with your monitoring tools — think Prometheus, Datadog, or OpenTelemetry.

It collects live metrics, traces, and logs to understand what’s really happening inside your system.
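This post doesn’t show how Tetrix talks to those tools, so the following is only a rough sketch of what pulling a metric could look like, using Prometheus’s documented `query_range` HTTP endpoint. The server address, the metric name, and both helper functions are assumptions made for illustration, and the response is hand-written in the documented shape rather than fetched from a live server.

```python
import json
from urllib.parse import urlencode


def build_range_query_url(base_url, promql, start, end, step="30s"):
    """Build a URL for Prometheus's /api/v1/query_range HTTP endpoint."""
    params = urlencode({"query": promql, "start": start, "end": end, "step": step})
    return f"{base_url.rstrip('/')}/api/v1/query_range?{params}"


def parse_matrix(payload):
    """Turn a query_range JSON response into {labels: [(timestamp, value), ...]}."""
    body = json.loads(payload)
    if body.get("status") != "success":
        raise ValueError(f"query failed: {body.get('error', 'unknown error')}")
    series = {}
    for item in body["data"]["result"]:
        labels = tuple(sorted(item["metric"].items()))
        # Prometheus encodes sample values as strings, so convert them to floats.
        series[labels] = [(float(ts), float(value)) for ts, value in item["values"]]
    return series


# Hypothetical server address and metric name, for illustration only.
url = build_range_query_url(
    "http://prometheus.internal:9090",
    'rate(http_requests_total{service="auth-service"}[5m])',
    start=1700000000,
    end=1700000600,
)

# A hand-written response in the documented shape, standing in for a live call.
sample_response = json.dumps({
    "status": "success",
    "data": {
        "resultType": "matrix",
        "result": [{
            "metric": {"service": "auth-service"},
            "values": [[1700000000, "12.5"], [1700000030, "48.0"]],
        }],
    },
})
series = parse_matrix(sample_response)
```

In a real integration the URL would be fetched over HTTP and the same parsing applied to the response body.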

2. Knowledge Layer

It builds a System Context Graph — linking services, deployments, code commits, and ownership structures.

Your system isn’t just data anymore — it’s a connected knowledge network.
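To make the idea of a context graph concrete, here is a minimal sketch of typed nodes joined by named relations. It is not Tetrix’s actual data model, which this post doesn’t describe. The `Node` and `ContextGraph` classes, the relation names, the commit ID, and the team name are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    kind: str  # e.g. "service", "deployment", "commit", "team"
    name: str


class ContextGraph:
    """A tiny directed graph whose edges carry a relation name."""

    def __init__(self):
        # (source node, relation) -> set of target nodes
        self._edges = defaultdict(set)

    def link(self, source, relation, target):
        self._edges[(source, relation)].add(target)

    def related(self, source, relation):
        # .get avoids creating empty entries for lookups that miss.
        return self._edges.get((source, relation), set())


graph = ContextGraph()
auth = Node("service", "auth-service")
deploy = Node("deployment", "auth-service:v2")
commit = Node("commit", "abc123")   # hypothetical commit ID
team = Node("team", "identity")     # hypothetical owning team

graph.link(auth, "deployed_as", deploy)
graph.link(deploy, "built_from", commit)
graph.link(auth, "owned_by", team)

# Walk two hops: which commits are behind the service's current deployments?
commits = {
    c
    for d in graph.related(auth, "deployed_as")
    for c in graph.related(d, "built_from")
}
```

Keeping the relation name on each edge is what lets a question like "who owns the service behind this deployment?" become a short graph walk.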

3. Reasoning Layer

Tetrix combines this graph with LLM reasoning, so when something breaks, it can explain why it broke instead of guessing at likely causes.

For example:

“Latency increased after auth-service:v2 deployment at 02:14 UTC.

CPU throttling observed on pod-34a.

Redis connection errors spiked concurrently. Root cause: misconfigured connection pool size.”

That’s not speculation. That’s AI with context.
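One simple way to arrive at a statement like the one above is to compare latency just before and just after each deployment. The sketch below does only that. It’s an illustration under stated assumptions, not how Tetrix reasons: the function name, the window size, the threshold, and the `billing:v7` deployment are all made up, and a jump that follows a deployment is a lead to investigate, not proof of cause.

```python
from statistics import mean


def suspect_deployments(samples, deployments, window=300, ratio=1.5):
    """Return (name, before_mean, after_mean) for deployments followed by a latency jump.

    samples:     list of (unix_timestamp, latency_ms)
    deployments: list of (name, unix_timestamp)
    """
    suspects = []
    for name, deployed_at in deployments:
        before = [v for t, v in samples if deployed_at - window <= t < deployed_at]
        after = [v for t, v in samples if deployed_at <= t < deployed_at + window]
        if not before or not after:
            continue  # not enough data on one side of the deployment
        before_mean, after_mean = mean(before), mean(after)
        if before_mean > 0 and after_mean / before_mean >= ratio:
            suspects.append((name, before_mean, after_mean))
    return suspects


# Synthetic data: latency sits at 100 ms, then jumps to 250 ms at t=300.
samples = [(float(t), 100.0 if t < 300 else 250.0) for t in range(0, 600, 60)]
deployments = [("auth-service:v2", 300.0), ("billing:v7", 60.0)]

suspects = suspect_deployments(samples, deployments)
```

Here only `auth-service:v2` is flagged, because the average after `billing:v7` rises by less than the 1.5x threshold.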

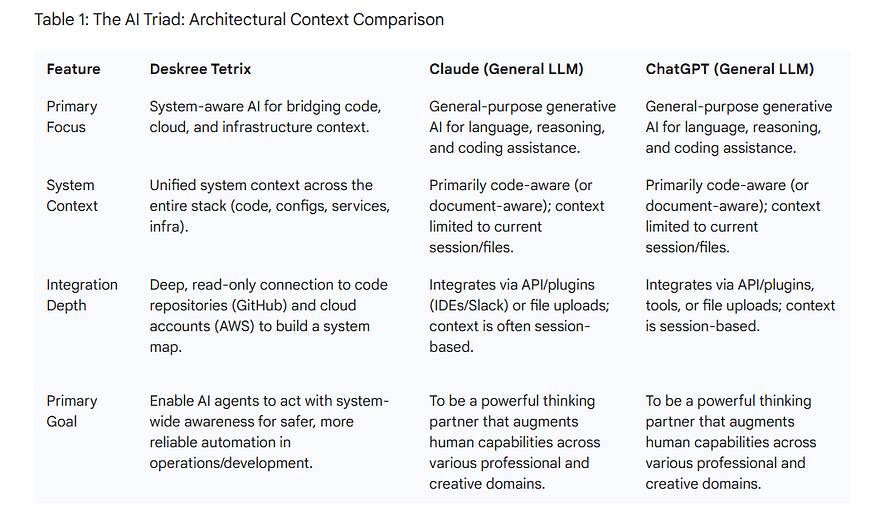

🧠 Tetrix vs ChatGPT vs Claude: Key Differences

While ChatGPT and Claude are designed for general problem-solving, Tetrix is built for system-level intelligence — the kind needed by engineers, DevOps teams, and AI operators.

Real Example: Debugging a Latency Spike

Let’s say your fintech app suddenly starts lagging during peak hours.

ChatGPT says:

“High latency could be caused by a slow database, network congestion, or high load.”

Tetrix says:

“Latency spiked after risk-engine:v3.2 deployment.

Feature extraction time increased due to an unoptimized data join in feature_store.py.

CPU throttling detected on worker-node-07.

Suggested fix: optimize feature caching or revert deployment.”

See the difference?

ChatGPT gives advice.

Tetrix gives answers.

The Future of AI Is Context-Aware

The next evolution of AI isn’t about making models bigger — it’s about making them aware.

Awareness means:

Knowing what’s deployed and where.

Understanding how one microservice impacts another.

Seeing metrics and logs as part of one system-wide story.
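The second point, how one microservice impacts another, can be sketched as a walk over a dependency map. This is a minimal illustration, not Tetrix’s implementation. The service names other than `risk-engine` are hypothetical, and the map is hard-coded here where a real one would be built from traces or service manifests.

```python
from collections import deque


def impacted_services(depends_on, failing):
    """Return every service that directly or transitively depends on `failing`."""
    # Invert the edges: for each dependency, record who calls it.
    dependents = {}
    for service, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, set()).add(service)

    # Breadth-first search upward through the callers.
    seen, queue = {failing}, deque([failing])
    while queue:
        current = queue.popleft()
        for caller in dependents.get(current, ()):
            if caller not in seen:
                seen.add(caller)
                queue.append(caller)
    return seen - {failing}


# Hypothetical dependency map: service -> services it calls.
depends_on = {
    "checkout": {"risk-engine", "payments"},
    "risk-engine": {"feature-store"},
    "reporting": {"payments"},
    "payments": set(),
}

impacted = impacted_services(depends_on, "feature-store")
```

With this map, a problem in `feature-store` reaches `risk-engine` and, through it, `checkout`, while `reporting` is unaffected.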

That’s what Tetrix enables: an AI that truly thinks inside your system instead of just talking about it.

It’s the shift from conversational AI to reasoning AI.

Why System Context Matters More Than Ever

Modern software stacks are complex, distributed, and constantly changing.

When something breaks, the answer isn’t in a single log file — it’s scattered across dozens of services and dashboards.

Without access to that data, LLMs like ChatGPT and Claude can’t connect those dots.

Tetrix can.

It doesn’t just summarize information — it correlates it.

It doesn’t just describe incidents — it diagnoses them.

That’s the promise of context-aware intelligence: the kind that sees what’s happening, understands why, and helps you act fast.

Closing Thoughts

The difference between ChatGPT and Tetrix isn’t just about features — it’s about philosophy.

ChatGPT can tell you how systems work.

Tetrix tells you why yours isn’t.

In a world where milliseconds matter and complexity keeps growing, AI needs more than words — it needs context.
