Editor’s note

Welcome back to Future of DevEx, where we explore how AI, infrastructure, and developer workflows are converging.

This week’s issue looks at a major shift in AI: Anthropic and OpenAI both introduced ways for AI to understand your company’s internal systems — moving from assistants to true context engines.

We’ll break down why they’re doing it, what it means for engineering teams, and how it changes the roadmap for every enterprise tool.

OPENAI AND ANTHROPIC WANT CONTEXT 📚

On October 16, Anthropic announced Claude Skills — a framework that lets organizations define company-specific capabilities and data connections that Claude can invoke across internal tools.

Days later, OpenAI followed with Company Knowledge — allowing ChatGPT customers to connect Slack, Google Drive, GitHub, SharePoint, and more directly into the AI’s workspace.

Different companies, same trajectory.

The era of system-aware AI has officially begun.

WHY THIS IS HAPPENING 🧐

Enterprise AI has reached a plateau.

Nearly every organization uses LLMs in some form, but the results feel underwhelming — not because the models are weak, but because work itself is still fragmented.

Teams are not moving slowly because of hallucinations, decision-making, or bad tools. The real bottleneck is context sharing. As Brad Lightcap put it:

so much of work is what I like to call the “work around work” - switching between systems, chasing context, and stitching tools together to get things done

That same friction limits AI too.

It spends its time retrieving data, not reasoning with it.

It forgets what it just learned, forcing users to start over every time.

Both Anthropic and OpenAI realized that context — not just compute — is the real bottleneck to AI adoption and enterprise ROI.

The solution? Give AI persistent, shared knowledge of how a company operates — and let it work from there.

Their moves serve four strategic goals:

Fix the accuracy gap. Connecting AI to live company data turns it from a guessing machine into a reasoning engine.

Lock in enterprise gravity. Once your company’s context lives inside Claude or ChatGPT, you have little reason to switch. The productivity boost is large enough to outweigh the token costs that come with it (which is exactly what OpenAI and Anthropic want).

Win on trust. Both features are paired with strict data governance, permission models, and zero-training guarantees — the credibility layer enterprises have been waiting for.

Capture the new platform layer. Whoever controls system context becomes the operating system for enterprise AI.

This is less about assistants — and more about owning the architecture of enterprise knowledge.

WHAT THIS MEANS FOR OTHER COMPANIES 🧠

Until now, companies treated AI as a layer on top of existing systems.

Now, AI is becoming the interface between them.

Here’s the shift in motion:

From dashboards to reasoning. AI can correlate events and analytics instead of waiting for human interpretation. This changes how we interact with UI-heavy tools.

From meetings to decisions. Shared context reduces the overhead of status updates, cross-team reviews, and knowledge transfer.

From tools to ecosystems. Once your company knowledge is unified, AI can answer questions that used to require running between three departments and juggling five systems at once.

System context is now the real competitive edge. Tools that people used daily are turning into data sources that feed your chatbot.

WHAT THIS MEANS FOR PRODUCTS 🤓

For anyone building SaaS or developer tools, the message is clear:

If your platform stores enterprise data, you must make that data AI-readable.

Here’s what’s changing:

Context over content. It’s not enough to expose data — AI needs relationships, lineage, and dependencies.

Security becomes product design. Fine-grained access, traceability, and auditability are no longer compliance afterthoughts; they’re selling points.

Integration is existential. ChatGPT and Claude will become the new surfaces of enterprise workflows. If your tool isn’t connected, it’s invisible.

Agents are coming. AI won’t just read your product — it will operate it. Designing APIs for machine interaction is now table stakes.
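
To make "context over content" and "security becomes product design" a bit more concrete, here is a minimal sketch of what an AI-readable export could look like. Everything in it is an assumption made for illustration: the `ContextRecord` shape, the `export_for_ai` function, and the group names are hypothetical and do not describe any vendor's connector API.

```python
from dataclasses import dataclass, field


@dataclass
class ContextRecord:
    """One AI-readable item: the content plus the context around it (hypothetical shape)."""
    id: str
    kind: str                                               # e.g. "service", "doc"
    content: str
    depends_on: list[str] = field(default_factory=list)     # dependencies
    derived_from: list[str] = field(default_factory=list)   # lineage
    allowed_groups: set[str] = field(default_factory=set)   # fine-grained access


def export_for_ai(records, user_groups, audit_log):
    """Return only the records the caller may see, logging every access decision."""
    visible = []
    for rec in records:
        allowed = bool(rec.allowed_groups & user_groups)
        audit_log.append({"record": rec.id, "allowed": allowed})
        if allowed:
            visible.append({
                "id": rec.id,
                "kind": rec.kind,
                "content": rec.content,
                "depends_on": rec.depends_on,
                "derived_from": rec.derived_from,
            })
    return visible


# Illustrative data only.
records = [
    ContextRecord("svc-checkout", "service", "Handles cart checkout",
                  depends_on=["svc-payments"], allowed_groups={"engineering"}),
    ContextRecord("doc-payroll", "doc", "Payroll runbook",
                  derived_from=["doc-hr-policy"], allowed_groups={"finance"}),
]
audit_log = []
visible = export_for_ai(records, {"engineering"}, audit_log)
```

The design choice worth noticing is that the permission check and the audit entry happen in the same place as the export, so traceability is part of the data path rather than something bolted on later.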

This moment mirrors the early days of APIs in the 2010s — except this time, AI is the client.

HOW THIS EXPANDS ACROSS TEAMS 💬

Engineering

Both OpenAI and Anthropic are still far from giving engineering teams real system awareness. But once it arrives, the impact will be huge.

Imagine asking:

“Which services are over-provisioned?”

“What’s causing latency in checkout?”

“Are frontend and backend changes aligned for the next release?”

AI can only answer these once it understands how your repos, infra, and observability link together.
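
As a rough illustration of that linking, the sketch below models repos, services, infrastructure, and dashboards as a small graph and walks it to find everything connected to one service. The node names, the relation labels, and the `related` helper are all made up for the example; a real system would populate the edges from source control, deploy manifests, and observability tools.

```python
from collections import deque

# Hypothetical edges: (source, relation, target).
EDGES = [
    ("repo:checkout-api", "deploys", "service:checkout"),
    ("service:checkout", "calls", "service:payments"),
    ("service:checkout", "runs_on", "cluster:prod-east"),
    ("service:payments", "runs_on", "cluster:prod-east"),
    ("dashboard:checkout-latency", "observes", "service:checkout"),
    ("repo:web-frontend", "deploys", "service:storefront"),
]


def build_graph(edges):
    """Index edges in both directions so traversal can follow any link."""
    graph = {}
    for src, rel, dst in edges:
        graph.setdefault(src, []).append((rel, dst))
        graph.setdefault(dst, []).append((f"inverse:{rel}", src))
    return graph


def related(graph, start, max_hops=2):
    """Breadth-first search: map each node within max_hops of start to its distance."""
    seen = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if seen[node] == max_hops:
            continue  # do not expand past the hop limit
        for _rel, neighbor in graph.get(node, []):
            if neighbor not in seen:
                seen[neighbor] = seen[node] + 1
                queue.append(neighbor)
    seen.pop(start)
    return seen


graph = build_graph(EDGES)
checkout_context = related(graph, "service:checkout", max_hops=1)
```

With a structure like this, a question such as "What's causing latency in checkout?" at least starts from the right candidates: the repo that deploys the service, the services it calls, the cluster it runs on, and the dashboard that observes it.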

That’s what the next wave of engineering intelligence tools — including Deskree — is building toward.

Operations & Compliance

Audit prep becomes queryable.

Instead of searching Confluence, Jira, and emails, AI can trace controls, owners, and evidence through a single graph.
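
A minimal sketch of what "queryable" could mean here, assuming controls, owners, and evidence have already been pulled into one place. The control IDs, team names, and evidence references below are placeholders invented for the example, not real records.

```python
# Hypothetical compliance data, keyed by control ID.
CONTROLS = {
    "AC-1": {"title": "Access reviews", "owner": "security-team"},
    "BK-2": {"title": "Backup restore tests", "owner": "platform-team"},
}

# Hypothetical evidence items collected from different tools.
EVIDENCE = [
    {"control": "AC-1", "source": "jira", "ref": "placeholder-ticket"},
    {"control": "AC-1", "source": "confluence", "ref": "placeholder-page"},
]


def trace_control(control_id, controls, evidence):
    """Join one control with its owner and all evidence that points at it."""
    control = controls[control_id]
    items = [e for e in evidence if e["control"] == control_id]
    return {
        "control": control_id,
        "title": control["title"],
        "owner": control["owner"],
        "evidence": items,
        "has_gap": not items,  # no evidence found for this control
    }


def audit_gaps(controls, evidence):
    """List the controls that currently have no evidence attached."""
    return [cid for cid in controls
            if trace_control(cid, controls, evidence)["has_gap"]]


gaps = audit_gaps(CONTROLS, EVIDENCE)
```

The useful output for audit prep is often the gap list rather than the evidence itself: it tells an owner what is missing before an auditor asks.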

Finance & Product

AI will forecast cost trajectories, identify system inefficiencies, and connect them directly to user impact.

Customer & Support

Company knowledge models will surface precise, context-aware answers drawn from the actual system, not canned macros.

In short: the “copilot” phase is over.

The contextual reasoning phase has begun.

THE SHIFT: FROM TOOLS TO INFRASTRUCTURE 🛠️

When company knowledge lives in one connected system, AI stops being a feature and becomes infrastructure.

It eliminates redundant syncs and status meetings — because the context already exists.

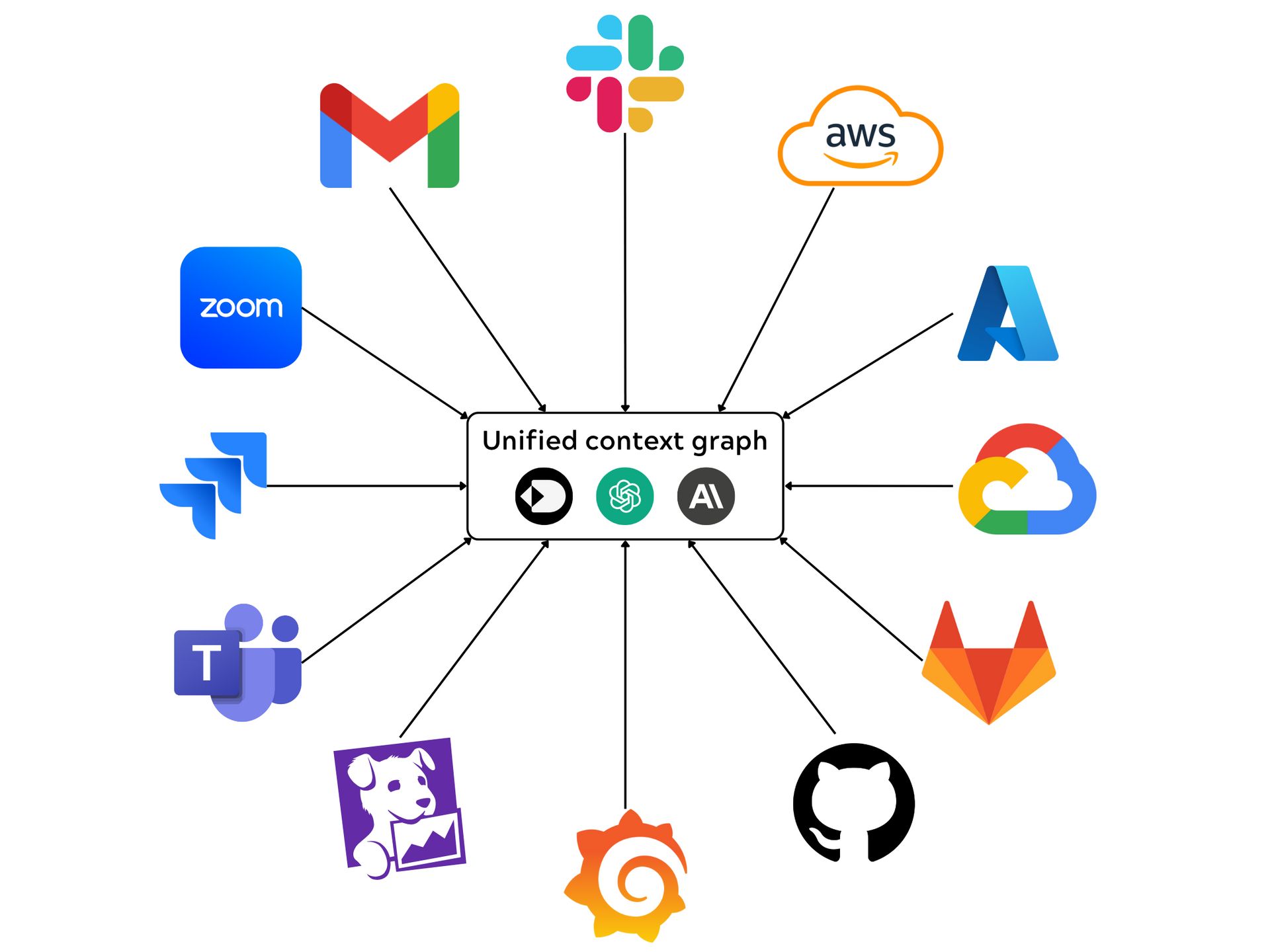

Both OpenAI and Anthropic are showing what the next layer of enterprise architecture looks like: the context graph.

THE TAKEAWAY 💡

The future is context-first.

If your product holds data, your next milestone is making that data usable by AI — safely, with governance and lineage intact.

Within the next year, enterprise buyers won’t ask what your software does.

They’ll ask what your AI understands.

And if your competitor’s product can answer — that’s the sale.

COMMUNITY SPOTLIGHT 🚀

Over the past few months, 1000+ engineers attended our Future of DevEx events across Toronto, NYC, SF, and LA, alongside partners like Vercel, Grafana, Arthur AI, and PostHog.

Across hundreds of conversations, a few things stood out:

AI without system context doesn’t scale.

Visibility beats volume.

Developer tools are evolving into reasoning layers.

Every city reached the same conclusion:

AI won’t replace engineers — but engineers with system-aware AI will replace everyone else.

The Future of DevEx event series continues, and we’ll be sharing our next stop with you very soon!

If you joined us at one of the events, check out the photo gallery and relive some of the highlights. And if you’re posting on socials, tag your moments with #FutureofDevEx — we’ll be sharing our favorites.

Till next time,