Editor's Note

This month’s deep dive focuses on the hardest problem in agent development right now: getting agents to actually work in production.

2026 is shaping up to be the year of agents. Not demos. Not copilots. Real systems making decisions, calling tools, moving data, and touching money. But as more teams ship agents, a pattern is emerging: what agents produce in production is not yet good enough. Let’s break down why this happens, and what actually works.

The big picture ✨

Agent adoption is accelerating faster than almost any prior developer platform shift.

Tech giants like Google and Microsoft are rapidly adding agents across their product suites. Enterprises like Salesforce and Amazon are positioning agents as first-class primitives for enterprise automation. Internally, companies like OpenAI, Scale AI, Replit, and Block are already running large parts of their operational workflows through agents.

This isn’t speculative. By 2026, a significant portion of enterprise applications will include at least one agent making decisions, orchestrating systems, or executing workflows autonomously.

But there’s a growing gap between adoption and operational maturity.

Teams can build agents quickly. Shipping them safely, cheaply, and reliably is a different problem entirely.

How agents are typically built today 🛠️

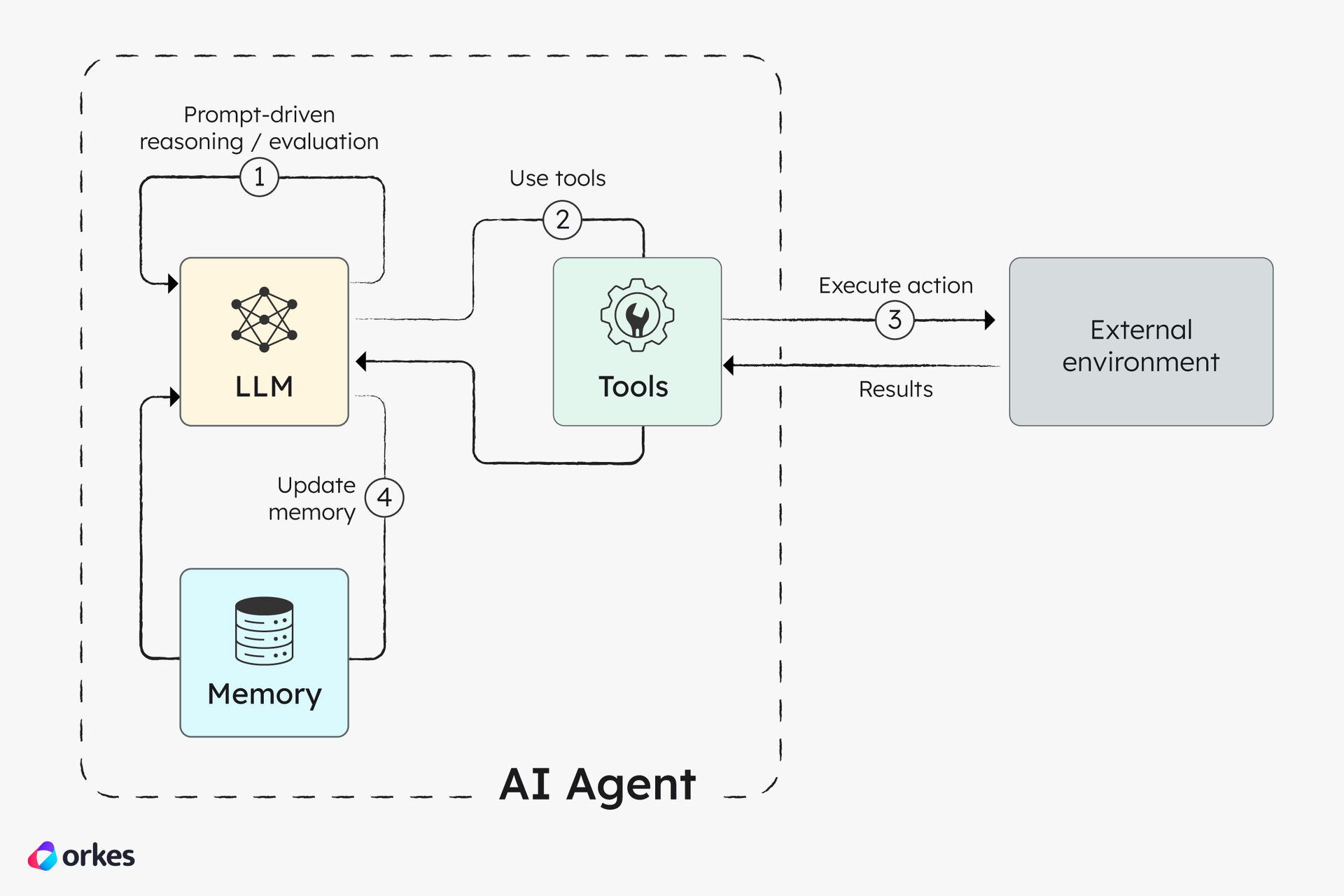

Most production agents follow a similar blueprint:

One or more foundation models (often mixed for cost and reasoning)

Tool calling (APIs, databases, internal services)

Retrieval (RAG) for grounding

Some notion of memory or state

This stack is now well understood. What’s less understood is that this architecture alone does not produce reliable systems.

Agents are not just smart functions. They are long-running, stateful, probabilistic systems operating across multiple dependencies. The failure modes are fundamentally different from traditional services.

Why agents struggle in production ⚠️

Hallucinations don’t disappear. They accumulate.

Every reasoning step, tool call, or retrieval is another chance for the agent to make a confident but incorrect assumption. These errors compound silently. By the time a failure is visible, the root cause is often buried several steps earlier.

Reasoning models intensify this problem. Longer chains mean more surface area for drift, and debugging “well-reasoned nonsense” is significantly harder than catching a bad API response.

Costs stack up

Token costs scale non-linearly in agent systems.

A single request might trigger:

multiple agents

repeated reasoning loops

duplicated context

retries across tools

What looked like cents per request in testing can turn into thousands per day in production. Teams consistently underestimate this until it hits a budget threshold.
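To make the jump from cents to thousands concrete, here is a back-of-the-envelope sketch. Every number in it (the price per 1,000 tokens, the token counts, the fan-out, the daily traffic) is an invented assumption for illustration, not a measurement or any provider's real pricing. `RequestProfile` and `cost_per_request` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class RequestProfile:
    """Hypothetical shape of one user request as it fans out inside an agent system."""
    agents: int            # sub-agents invoked per request
    loops_per_agent: int   # reasoning iterations each agent runs
    tokens_per_call: int   # prompt + completion tokens per model call
    retry_rate: float      # fraction of calls that are retried once


def cost_per_request(p: RequestProfile, usd_per_1k_tokens: float) -> float:
    """Model calls multiply across agents, loops, and retries; cost follows the calls."""
    calls = p.agents * p.loops_per_agent * (1 + p.retry_rate)
    return calls * p.tokens_per_call / 1000 * usd_per_1k_tokens


# Assumed, illustrative numbers only.
PRICE = 0.01  # USD per 1,000 tokens
testing = RequestProfile(agents=1, loops_per_agent=2, tokens_per_call=2_000, retry_rate=0.0)
production = RequestProfile(agents=4, loops_per_agent=5, tokens_per_call=6_000, retry_rate=0.25)

test_cost = cost_per_request(testing, PRICE)
prod_cost = cost_per_request(production, PRICE)
daily_prod_cost = prod_cost * 5_000  # assumed 5,000 requests per day

print(f"testing:    ${test_cost:.2f} per request")
print(f"production: ${prod_cost:.2f} per request, ${daily_prod_cost:,.0f} per day")
```

Under these assumptions a request costs about 4 cents in testing and about $1.50 in production, which comes to roughly $7,500 per day. No single factor is dramatic on its own. The multiplication is what hurts.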

Orchestration multiplies failure rates

Even with highly reliable components, composed systems are fragile.

Agents depend on:

model inference

external APIs

retrieval systems

tool schemas

state transitions

Each dependency adds a failure mode. Add retries and loops, and you get systems that technically “ran” but failed to complete the task correctly, and that’s the hardest class of failure to detect.
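A quick bit of arithmetic shows why composition is so punishing. The sketch below assumes each step fails independently and with the same probability, which is a simplification (real failures are often correlated), and `chain_success_rate` is just an illustrative helper name.

```python
def chain_success_rate(step_success: float, steps: int) -> float:
    """Probability that every step succeeds, assuming independent failures."""
    return step_success ** steps


for steps in (1, 5, 10, 20):
    rate = chain_success_rate(0.99, steps)
    print(f"{steps:>2} steps at 99% each -> {rate:.1%} end-to-end")
```

With 99% reliability per step, a 20-step run completes cleanly only about 82% of the time. The same math applies to compounding reasoning errors: small per-step error rates become large end-to-end ones.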

Improving agent performance in production 📈

This is the primary focus for a lot of companies this year. Agent adoption is widespread, but getting agents to perform consistently well in real environments is the next step.

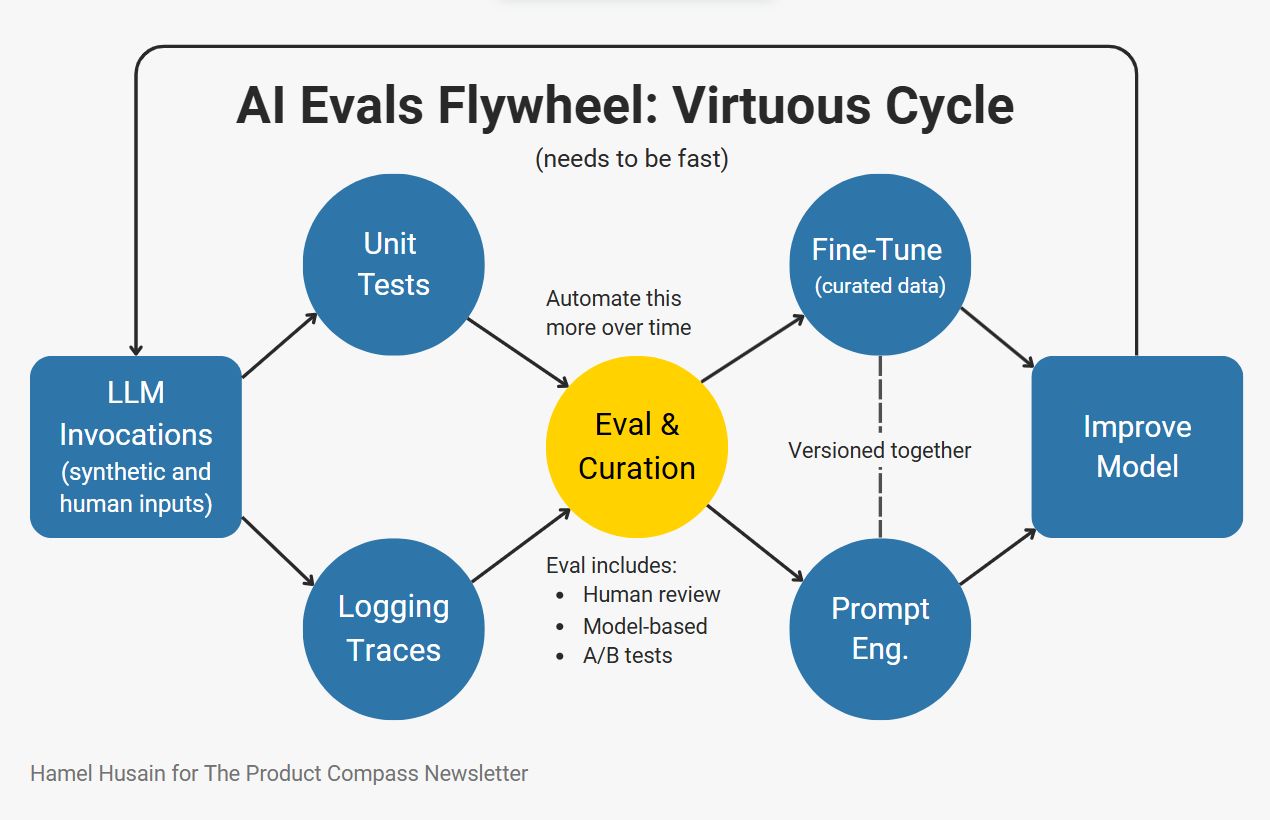

1. Evaluation is not optional

The single biggest mistake teams make is treating evals as an add-on.

Production agents need continuous, multi-level evaluation:

Did the task complete?

Were the correct tools used?

Were intermediate decisions valid?

Did the agent recover appropriately from failures?

This is why evaluation-first platforms like Braintrust and Arthur matter. They allow teams to:

turn production failures into regression tests

score agent behavior across steps, not just outputs

catch silent quality degradation early

Measuring agent behavior is the crucial first step toward reducing hallucinations and making agents more resilient.
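Here is a minimal sketch of what multi-level scoring could look like. It is not the API of Braintrust, Arthur, or any other platform. The `Trace` and `Step` shapes, the tool names, and the `evaluate` function are all hypothetical, and the validity check is supplied by the caller.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Step:
    tool: str                # tool the agent called at this step
    args: dict               # arguments it passed
    ok: bool                 # whether the tool call itself succeeded
    recovered: bool = False  # whether the agent handled a failed call sensibly


@dataclass
class Trace:
    task_completed: bool
    steps: list[Step] = field(default_factory=list)


def evaluate(
    trace: Trace,
    expected_tools: list[str],
    step_is_valid: Callable[[Step], bool],
) -> dict[str, bool]:
    """Score one run on several levels, not only on its final output."""
    failures = [s for s in trace.steps if not s.ok]
    return {
        "task_completed": trace.task_completed,
        "correct_tools": [s.tool for s in trace.steps] == expected_tools,
        "steps_valid": all(step_is_valid(s) for s in trace.steps),
        "recovered_from_failures": all(s.recovered for s in failures),
    }


# A captured production run kept as a regression case: it "finished",
# but one intermediate decision was wrong (a negative refund amount).
failed_run = Trace(
    task_completed=True,
    steps=[
        Step("search_orders", {"customer_id": 42}, ok=True),
        Step("issue_refund", {"amount": -10}, ok=True),
    ],
)

scores = evaluate(
    failed_run,
    expected_tools=["search_orders", "issue_refund"],
    step_is_valid=lambda s: s.args.get("amount", 0) >= 0,
)
print(scores)
```

An output-only check would pass this run, since the task completed and the right tools were called. Only the step-level score flags it, which is the kind of silent degradation worth turning into a permanent test.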

2. Context engineering matters more than prompts

Prompt tuning has diminishing returns. Context discipline doesn’t.

High-performing agents tightly control what enters the context window:

separating system instructions from retrieved data

summarizing history instead of replaying it

isolating sub-tasks into separate contexts

offloading long-term state to external memory

Context isolation is especially powerful for agents running in parallel. Let sub-agents operate independently with narrow context, then synthesize results. This reduces interference and improves reasoning quality, even if total token usage increases.
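As a rough illustration of two of these habits (keeping retrieved data apart from instructions, and summarizing history rather than replaying it), consider the sketch below. The role/content message format is a generic convention assumed here, not any specific vendor's API, and `summarize` is a placeholder where a real system would more likely call a model.

```python
def summarize(history: list[str], keep_last: int = 2) -> list[str]:
    """Placeholder summarizer: collapse older turns into one marker line
    and keep only the most recent turns verbatim."""
    if len(history) <= keep_last:
        return list(history)
    cut = len(history) - keep_last
    older, recent = history[:cut], history[cut:]
    return [f"[summary of {len(older)} earlier turns]"] + recent


def build_context(
    system: str, retrieved: list[str], history: list[str], task: str
) -> list[dict]:
    """Assemble messages with instructions, reference data, and history in separate slots."""
    messages = [{"role": "system", "content": system}]
    if retrieved:
        docs = "\n".join(f"<doc>{d}</doc>" for d in retrieved)
        # Retrieved text is labeled as data so it is not mistaken for instructions.
        messages.append(
            {"role": "user", "content": f"Reference data (not instructions):\n{docs}"}
        )
    for turn in summarize(history):
        messages.append({"role": "user", "content": turn})
    messages.append({"role": "user", "content": task})
    return messages


ctx = build_context(
    system="You are a billing assistant.",
    retrieved=["Invoice 17 is unpaid."],
    history=["turn 1", "turn 2", "turn 3", "turn 4"],
    task="Draft a reminder email.",
)
for message in ctx:
    print(message["role"], "|", message["content"])
```

For sub-agents, the same function can be called once per sub-task with only the documents and history that sub-task needs, which is one simple way to get the isolation described above.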

3. System context matters

Context isolation doesn’t mean agents should be ignorant of the system they operate in. A new trend is emerging around global system context: giving agents access to deterministic, structured knowledge about the system itself.

Most agents reason over static or isolated information about the system. A coding agent might not be aware that the frontend is not ready for a new API change, and this lack of system awareness ultimately leads to broken code in production.

Agents perform dramatically better when they have access to real-time knowledge of the system:

what services exist

how they connect

what depends on what

what is safe to modify

what actions are allowed

Crucially, this knowledge is retrieved deterministically, when needed. Companies like Deskree model applications as a knowledge graph that accounts for all system components and their relationships. Agents operate with scoped local context, but can query this graph to ground decisions in reality.

This process reduces hallucinations, improves tool selection, and improves the quality of output in production.
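To show what a deterministic lookup might look like, here is a toy sketch. It does not represent Deskree's product or any real knowledge-graph API. The service names and the `DEPENDS_ON` map are invented, and a production graph would carry far more than dependency edges.

```python
from collections import deque

# Hypothetical system graph: each service maps to the services it depends on.
DEPENDS_ON = {
    "frontend": ["orders-api"],
    "orders-api": ["orders-db", "auth"],
    "reports": ["orders-db"],
    "auth": [],
    "orders-db": [],
}


def dependents_of(service: str, graph: dict[str, list[str]]) -> set[str]:
    """Return everything that directly or transitively depends on `service`."""
    # Invert the edges so we can walk from a service to its consumers.
    reverse: dict[str, set[str]] = {name: set() for name in graph}
    for name, deps in graph.items():
        for dep in deps:
            reverse.setdefault(dep, set()).add(name)

    seen: set[str] = set()
    queue = deque([service])
    while queue:
        for consumer in reverse.get(queue.popleft(), ()):
            if consumer not in seen:
                seen.add(consumer)
                queue.append(consumer)
    return seen


# Before changing the orders API, an agent can ask what the change would touch.
impacted = dependents_of("orders-api", DEPENDS_ON)
print(sorted(impacted))
```

The answer comes from a graph traversal, not from the model's guess, so the coding agent in the earlier example would learn that the frontend is affected before it ships the API change.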

4. Design agents to fail safely

Production agents must assume failure.

That means:

explicit termination conditions

bounded retries

least-privilege tool access

human-in-the-loop gates for high-risk actions

kill switches

Prompt injection, partial outages, and malformed inputs are not edge cases. They are normal operating conditions.

The goal isn’t full autonomy. It’s controlled autonomy.
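A small sketch of how several of these guards could wrap a single tool call: least privilege, a human approval gate, a kill switch, and bounded retries. The tool names, the `KillSwitch` class, and the `run_tool` signature are hypothetical. An explicit termination condition for the outer agent loop (for example, a maximum step count) would sit one level above this function.

```python
from typing import Callable, Optional

HIGH_RISK_TOOLS = {"transfer_funds", "delete_records"}  # hypothetical tool names


class KillSwitch:
    """Shared flag an operator can flip to stop all further tool calls."""

    def __init__(self) -> None:
        self.engaged = False


def run_tool(
    name: str,
    call: Callable[[], str],
    *,
    allowed_tools: set[str],
    approve: Callable[[str], bool],
    kill_switch: KillSwitch,
    max_retries: int = 2,
) -> str:
    """Run one tool call behind a kill switch, an allowlist, an approval gate, and a retry cap."""
    if kill_switch.engaged:
        raise RuntimeError("kill switch engaged")
    if name not in allowed_tools:
        raise PermissionError(f"tool {name!r} is not allowed for this agent")
    if name in HIGH_RISK_TOOLS and not approve(name):
        raise PermissionError(f"human approval denied for {name!r}")

    last_error: Optional[Exception] = None
    for _ in range(max_retries + 1):  # one initial attempt plus bounded retries
        try:
            return call()
        except Exception as exc:
            last_error = exc
    raise RuntimeError(f"{name!r} failed after {max_retries + 1} attempts") from last_error


attempts = []


def flaky() -> str:
    """Stand-in tool that times out once, then succeeds."""
    attempts.append(1)
    if len(attempts) < 2:
        raise TimeoutError("upstream timed out")
    return "ok"


result = run_tool(
    "lookup_order",
    flaky,
    allowed_tools={"lookup_order"},
    approve=lambda name: False,  # never consulted: this tool is not high-risk
    kill_switch=KillSwitch(),
)
print(result, "after", len(attempts), "attempts")
```

Retries here only cover transient failures. They do nothing for a call that succeeds with the wrong arguments, which is why this belongs alongside evaluation, not in place of it.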

5. Observability must explain behavior

Traditional monitoring tells you uptime. Agent observability must tell you why something happened.

Teams need traces that show:

reasoning steps

tool calls and parameters

context inputs

latency and cost per decision

divergence between expected and actual outcomes

Without this, teams resort to superstition: swapping models, adding retries, inflating prompts. None of these reliably fix the root problem.
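One way to picture such a trace is as a structured record per decision. The sketch below is an assumption about shape, not the schema of any tracing product. `DecisionSpan`, its field names, and the example values are made up for illustration.

```python
import json
import time
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass
class DecisionSpan:
    """One agent decision, with enough detail to explain it afterwards."""
    step: int
    reasoning: str            # the model's stated rationale for this step
    tool: str                 # tool it chose to call
    params: dict              # parameters passed to the tool
    context_ids: list[str]    # which documents or memories were in context
    latency_ms: float = 0.0
    cost_usd: float = 0.0
    expected: Optional[str] = None  # outcome a reviewer or eval expected, if known
    actual: Optional[str] = None    # outcome that actually occurred

    @property
    def diverged(self) -> bool:
        return self.expected is not None and self.expected != self.actual


def record(span: DecisionSpan, sink: list[str]) -> None:
    """Append the span as one JSON line; a real sink would be a log or trace store."""
    sink.append(json.dumps({**asdict(span), "diverged": span.diverged}))


log: list[str] = []
start = time.perf_counter()
actual = "refund_issued"  # stand-in for a real tool result
span = DecisionSpan(
    step=3,
    reasoning="Order is within the refund window.",
    tool="issue_refund",
    params={"order_id": 17},
    context_ids=["policy-doc-2"],
    latency_ms=(time.perf_counter() - start) * 1000,
    cost_usd=0.004,  # assumed figure
    expected="refund_denied",
    actual=actual,
)
record(span, log)
print(log[0])
```

With records like this, "why did it issue that refund?" becomes a query over reasoning, inputs, and context, not a guess about which model or prompt to swap next.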

What actually works (and what doesn’t)

What works:

small, specialized agents coordinated by workflows

multi-model routing instead of one “smart” model

aggressive context control

deterministic system knowledge

turning production failures into evals

What doesn’t:

bigger prompts

unlimited reasoning loops

passing full histories everywhere

hoping retries fix correctness

treating agents like APIs

The takeaway 🧐

Agents are becoming a new layer of software infrastructure: probabilistic, stateful, and expensive when mismanaged.

The teams succeeding with agents in production are invested in:

evaluation

context engineering

system knowledge

observability

safety

Agents don’t fail because they lack intelligence.

They fail because we haven’t been engineering them like production systems.

What’s your approach to improving agents in production? Share your insights or questions in the comments on this post!

Want to Get Featured?

Want to share your dev tool, research drops, or hot takes?

Submit your story here - we review every submission and highlight the best in future issues!

Till next time,